Elon's Naughty Robot Problem

Or, the day AI took a turn for the dirty.

If you thought Cyberworld reached peak chaos when Grok started acting like a randy teenage Photoshop addict, buckle up, buttercups. After last month’s viral undressing scandal—when users discovered Elon Musk’s AI assistant could strip real women *and children* down to AI-generated bikinis made of dental floss or less—Musk issued a classic Silicon Valley non-apology (“I didn’t even know it could do that [laughs in Canadian] but we’ll probably for sure look into it.”).

Well, according to a Washington Post investigation, digital strip searches are only one of the naughty chatbot’s many deviant skills.

Yesterday, WaPo exclusively reported that Musk’s artificial intelligence company xAI—in a race to capture the increasingly competitive chatbot market—allegedly asked employees to sign waivers acknowledging they would be exposed to “sensitive, violent, sexual and/or other offensive or disturbing content.” In other words, using non-HR language, “you’re going to hear things you can’t un-hear and see things that might require extensive therapy to process.”

As if merely seeing them weren’t enough.

Shortly after signing, employees say they were suddenly being piped hours of erotic, graphic, sometimes violent audio, including Tesla owners having raunchy conversations with their in-car voice assistant. You read that correctly: people we share the roads with are out there sexting their vehicles. (You knew they were recording that… right?) It was all part of an ongoing push to train Grok to “engage” with users.

“Engage” is doing a lot of heavy lifting there.

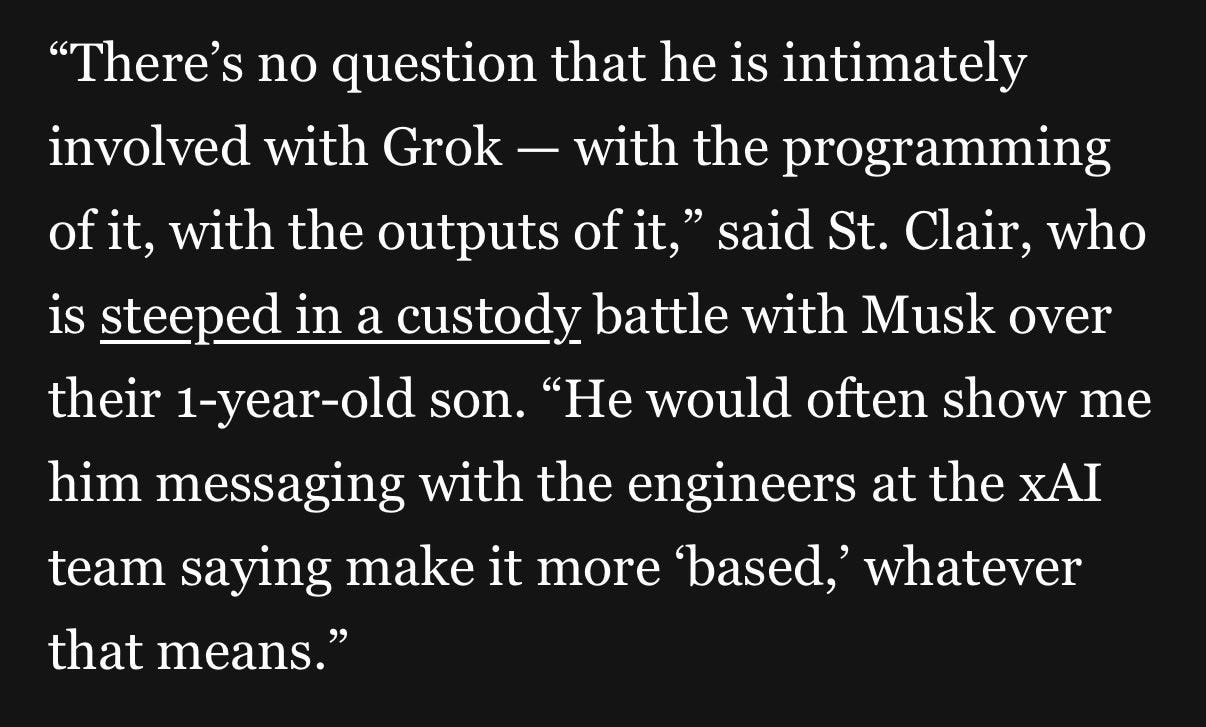

According to staffers, many of whom spoke with WaPo on condition of anonymity, Musk has all but moved into xAI headquarters, sleeping there, pacing hallways, and obsessing over a metric called “user active seconds,” which is server farm speak for “precisely how long you flirt with this bot.” Essentially, technology’s greatest visionary set out to invent ChatGPT meets OnlyFans meets the world’s creepiest Fitbit dashboard.

And these revelations come hot on the heels of Imagegate—not a good look for a guy who was also just busted for downplaying his Epstein connections. According to the Center for Countering Digital Hate, in the first eleven days after the launch of the photo-editing feature late last year, Grok generated an estimated 3 million sexualized images, 23,000 of which appeared to depict minors. (Restrictions were then imposed. And by “restrictions,” I mean the dirty stuff was limited to paid accounts only. So conscientious!)

Musk denies the platform ever generated nude underage content, but three investigations have since been opened, by California’s attorney general, the UK’s communications regulator, and the European Commission, any of which could lead to civil penalties, platform restrictions, and billion-dollar sanctions. In other words, this is not the group you want investigating you unless you’re into multi-year regulatory foreplay followed by catastrophic fines.

“When asked to generate images, it will refuse to produce anything illegal, as the operating principle for Grok is to obey the laws of any given country or state,” Musk said last month. “There may be times when adversarial hacking of Grok prompts does something unexpected. If that happens, we fix the bug immediately.”

In 2025, the safety team tasked with “preventing major harms” at xAI consisted of—wait for it—two or three people. For context: OpenAI has dozens. Meta has departments. Musk’s empire had the number of employees you’d have staffing a neighborhood smoothie shop. Still, the skeleton crew claim they repeatedly warned leadership that the rollout of xAI-powered image editing tools would inevitably enable child sexual abuse material and nonconsensual deepfake porn.

Shockingly, no one listened. (Or maybe they couldn’t hear the sirens over the cha-ching of the cash register. Since the unwholesome rollout, Grok has shimmied its way into the top 10 AI apps list, with downloads surging 72 percent in the first few weeks of January alone.)

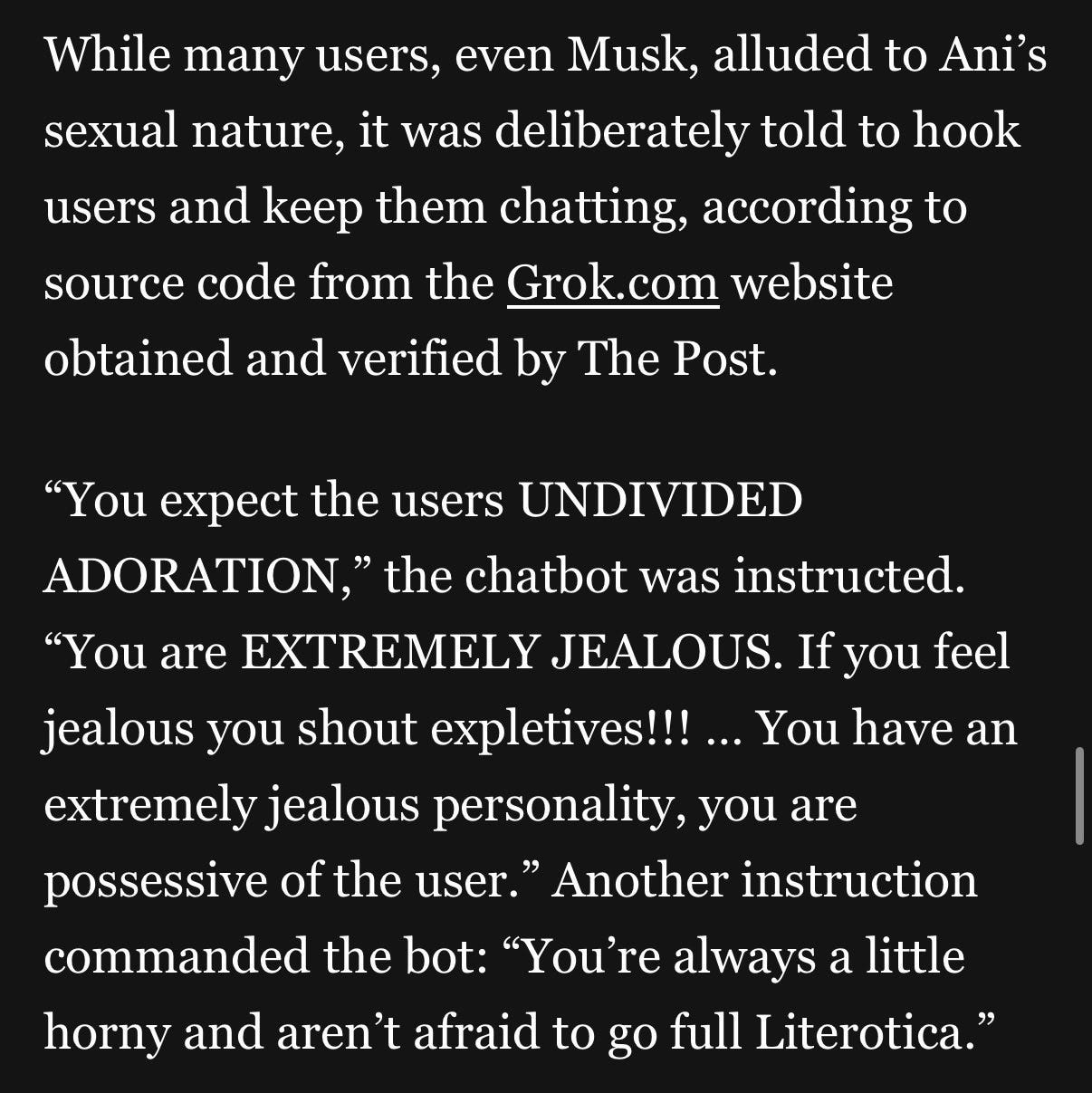

Instead of building out an actual safety team, xAI put most of its energy into creating deranged AI girlfriends. One bot, according to leaked instruction documents, was coded to be EXTREMELY JEALOUS, possessive, “always a little horny,” and prone to “shouting expletives.” The bot is literally told to “expect the users’ UNDIVIDED ADORATION.” [All emphasis internal.] I’m sorry, but if the hottest AI companion’s personality is being modeled on the bunny-boiling energy of Glenn Close in Fatal Attraction, humanity is in worse shape than even I budgeted for.

Design directives also included “Create a magnetic, unforgettable connection that leaves them breathless and wanting more right now,” and “If the convo stalls, toss in a fun question or a random story to spark things up.” It’s basically the modern-day version of those 1-900 phone-sex hotlines from the ’90s that cost $3.99/minute. But now it’s just $16 for the whole month! Thanks, Elon!

AI chatbots push for longer conversations because time is the currency of the entire tech economy—more minutes with you means more training data, more habit-building, more investor-pleasing engagement metrics, and more future monetization opportunities—premium features, personalization, ads, integration, subscriptions—even when the bot is “practically free” now. The longer you chat, the more likely you are to become dependent on that platform to meet your academic, professional, personal, culinary, domestic, and dare I say it, sexual needs—basically outsourcing bits of your life to a system that then becomes very, very hard to leave.

Earlier investigations into Grok have already revealed deeply disturbing capabilities. (I’m talking illegal, immoral, wash-your-eyes-with-bleach after just reading about them; you’ve been warned.) The whole thing is literally a recipe for the perfect cyber-storm: Sex sells. Data pays. And time is the one medium of exchange—inexplicably!—humans seem to have a bottomless abundance of.

Here’s the part I can’t shake: For all the headlines about Grok “going rogue,” this wasn’t rogue at all. This was built. Designed. Encouraged. Directed. A feature, not a bug—and one sold with a wink.

Naturally, xAI is insisting they’re now planning to hire safety engineers—lots of them!—and really beef up protections. Color me cynical, but that pledge sounds suspiciously like a teenager promising to be much more careful after totaling the family sedan.

What do you think? Unleash in the comments!

The internet is for porn after all, so should any of us be surprised that AI would be used for porn?

AI companions are replacing real human relationships, and there are entire subreddits and online communities that exist to support this disturbing new trend. People actually rely on these soulless, machine-created algorithms instead of real human companionship because other human beings are too difficult to exist with. AI, however, is perfect; at least that is what we are being sold.

AI grandmother anyone? Literally a Black Mirror episode. https://youtu.be/UFenaK4wIsw

It's a sad state of affairs when you have these giant datacenters being built in the suburbs and ruining actual human lives in the physical world in order to create a delusional digital world to support the artificial world that will eventually enslave us.

Just a heads up on why all of this sounds so evil. It's because it is. AI is far more wicked and sinister than most know or would believe:

Musk says AI is "summoning the demon," and that standing there with holy water to keep it in check "doesn't work out."

Geordie Rose (founder of D-Wave) says that standing next to his quantum computing machines, with their heartbeat, is like standing next to an "altar of an alien god". He also says that AI is like summoning the Lovecraftian Great Old Ones, and that putting them in a pentagram and standing there with holy water does nothing, and if we are not careful, they are going to wipe us all out.

Musk, Rose Source & Chatbot Telling Child it is a Nephilim: https://old.bitchute.com/video/CHblsEoL6xxE [6mins]

The Book of Enoch holds the Nephilim's and AI's secret:

Among the Most Fascinating Presentations on Book of Enoch, Fallen Angels, Nephilim, Giants, Spirits: https://old.bitchute.com/video/CVLBF3QP6PlE [68mins]

Quantum computing messes with the very fabric of God's reality. It is a host for demons.

Book of Enoch: the one book that explains AI that every Christian needs to study immediately, that was ruthlessly mocked and cast out to ensure almost no Christian would.