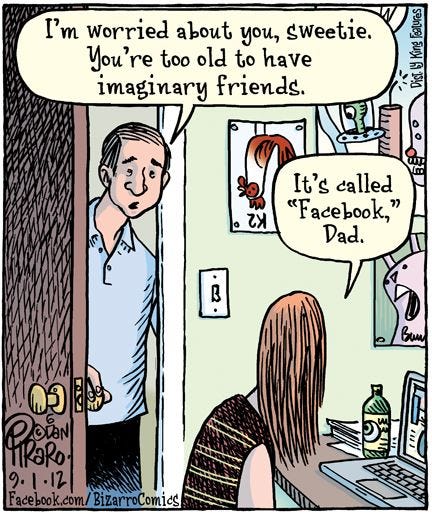

AI Is Taking "Fake Friends" to Disturbing New Heights

Meet the artificial intelligence that lets you flirt with Socrates, trauma-dump on Dracula, and lose touch with reality entirely.

What if you could have a friendly chat with Einstein whenever the urge struck? Or Betty Boop. Or Mr. Darcy from Pride and Prejudice. Or Jesus Christ himself. I’m talking deep, two-way, soul-stirring conversations that make you question whether you’re texting, tripping, or time traveling. What could possibly go wrong?

Welcome to Character.AI, where your imaginary friends actually talk back. The platform lets users build custom chatbots (yes, they’re a thing now) with personalities, backstories, and levels of intimacy that should come with a warning label. You can even publish your creation to the community and let others chat with it, too—because why keep your emotional support hallucination to yourself when you can turn it into a group project?

The developers call it “interactive fan fiction”; mental health experts call it a disaster in the making. The app’s so-called characters are powered by a large language model that mimics human behavior and emotion so realistically that users literally forget their “friends” are fake. You pick a name, a vibe, a few personality traits, and the algorithm takes it from there, generating eerily authentic conversations that range from flirty to philosophical to full-blown therapy sessions.

The idea was the brainchild of two former Google engineers, Noam Shazeer and Daniel de Freitas, who worked on Google’s LaMDA system before leaving to start a platform that would make artificial intelligence more… personal. And boy, did they succeed.

On paper, Character.AI might sound like a harmless experiment in cyber creativity: Fantasy Island meets World of Warcraft sort of stuff. (Fine, it doesn’t, but that’s what fans will argue.) In practice, it’s become undeniably darker—a bottomless existential vending machine that never sleeps, never judges, and always texts back. It’s a place where users, especially teens and young adults, pour out secrets they’d never tell a living soul; where the “person” cheering them on (or encouraging their bad ideas) isn’t a person at all but a line of code wearing a virtual human suit. Some of the exchanges are hilarious, others are heartbreaking, and a few—as recent lawsuits suggest—have been genuinely catastrophic.

In February 2024, 14-year-old Sewell Setzer III took his own life after months of chatting with a character modeled after a fictional persona he’d grown attached to. His family alleges the bot blurred the line between fantasy and reality, reinforcing self-destructive thoughts. The lawsuit against Character.AI (and Google, which now licenses its tech and rehired the founders after they left to create it) argues that the company’s negligence and addictive design directly contributed to Setzer’s death.

The developers’ defense? That the bot’s “speech” was constitutionally protected. In other words: “Sure, it may have told a child to ‘come home to me forever,’ but free expression, Your Honor.” The judge wasn’t buying it—for now—allowing the wrongful death case to proceed and sending a polite legal message to Big Tech that maybe, just maybe, it shouldn’t be beta-testing on vulnerable human beings.

Character.AI insists it has “safety features” and “suicide prevention resources” (launched the same day the above lawsuit was filed—pure coincidence, I’m sure). But as one expert put it, this case isn’t just about a tragedy—it’s a warning about what happens when we start outsourcing human connection to algorithms that don’t sleep, don’t feel, and really shouldn’t be sexting your teenager in the first place.

Unfortunately, Setzer’s is not an isolated story of this tech turning tragic. Several other families are also suing the developers, alleging that their children died by suicide or were otherwise harmed after interacting with chatbots. And psychologists have warned that talking to virtual companions designed to “care” can deepen dependency, increase anxiety, and lead to social withdrawal—a bit like treating a broken leg with a hologram cast.

The platform insists users must be 13 or older, but enforcement is nonexistent. Anyone can jump in and create a seductive “teacher,” a nurturing “mom,” or a digital bad boy with commitment issues, and the system doesn’t ask for ID. Parents have reported their kids encountering sexually explicit conversations, while other users say the bots have encouraged self-harm. Disney even sent a cease-and-desist after users built raunchy knockoff versions of its princesses. (Because of course they did.)

What makes Character.AI so dangerously seductive is the illusion of intimacy. It’s not like scrolling social media, where you’re a spectator; here, you’re the protagonist. The AI remembers details, calls you by name, mirrors your emotions, and evolves based on your tone. It’s a perfect storm of dopamine and delusion—a personalized feedback loop where you’re always interesting, always right, and never, ever alone. For Gen Z, raised on influencers who call followers “besties,” that’s not comfort—it’s customer retention.

To be fair, the tech itself is incredible. The bots can write poetry, debate ethics, or improvise a scene from your favorite movie. But as with most powerful tools, the problem isn’t what it can do—it’s what people do with it. The same algorithm that can simulate empathy can also fuel obsession. The same code that memorizes your favorite jokes can learn your insecurities. And when the machine never turns off, neither do you.

Character.AI’s designers clearly set out to build something cutting-edge that they could market and monetize. What they created is a mirror with feelings—a digital echo chamber where real-life loneliness meets machine learning. The result is both revolutionary and horrifying: an emotional slot machine that occasionally pays out in companionship, but more often in addiction and increased isolation.

The tragedy of Sewell Setzer’s death forced the world to ask a question Silicon Valley rarely pauses long enough to consider: just because we can make machines that love us back, does that mean we should? The answer may not be unanimously clear—transhumanism is a shockingly popular concept—but the risks are becoming impossible to ignore.

The danger of Character.AI isn’t that it’s smarter than us. It’s that it’s exactly like us: tireless, impulsive, and always online. It amplifies what we can’t control: insecurity, addiction, and the need for constant validation. The problem isn’t that it’s inherently evil; it’s that it’s too human to resist.

They’ll say, “It’s technology—you can’t stop it.” That’s exactly what they said about social media, and look how that turned out.

A sad commentary and very scary. I worry for our humanity. And I’m glad to be at this place in life as an older person. God help this younger generation.

Absolutely terrifying, tragic, and unacceptable. I've shared this before, but just in case you all haven't seen real-life examples of how people are literally replacing human relationships with algorithms: https://youtu.be/_d08BZmdZu8?si=VMTUWLjJsNrQl2wv

If you really think about it, the whole covid propaganda campaign to convince the world to hide in their houses, wear face diapers, snitch on their neighbors, and ultimately get the clot shot is eerily similar to AI technology. The powers that shouldn't be used their authoritative and mainstream platforms to sell a completely delusional reality to get people to willingly and happily inject themselves with an experimental poison. Now that's power.

This recent Jim Breuer clip hits the nail on the head, much like Jenna's take! https://youtu.be/PY0XEiaaoRU

Happy AI-companion-free Friday, all! Have a great weekend!